Migrating from Temporal to a Postgres-based task orchestrator

How and why we migrated millions of tasks from Temporal to a Postgres-based scheduler.

At Nango, we help engineering teams build product integrations (connecting your product with Salesforce, Google Calendar, Slack, etc.). Over the last year, the usage of our platform has grown more than tenfold, requiring us to continuously upgrade our stack.

Recently, we replaced a core part of our infrastructure that schedules and runs interactions with third-party APIs.

Historically, we used Temporal for this task, but we have now implemented our own queuing and scheduling system on Postgres.

In this blog post, we will walk you through our decision-making process, how we built our new queue and scheduler on Postgres, and how we migrated millions of executions in production without any downtime for our customers.

Where We Started

Nango has been using Temporal since day one to schedule and run interactions with third-party APIs on our infrastructure. These are things like syncing HubSpot contacts, creating Linear issues, and syncing employees from Workday.

Many of these interactions are pre-built by Nango, but our users can also modify existing ones and write their own interactions.

When we run interactions, we are (often) running untrusted user code.

Why We Moved Away from Temporal

Unfortunately, we could never leverage Temporal's key feature: seamless resuming of failed workflows. This was largely because we were running untrusted user code.

This reduced Temporal, an otherwise really impressive and interesting product, to a pretty expensive and complex queuing and scheduling system for us. Clearly, we were not using the best tool for our job.

The dependency on Temporal had also become a growing barrier to adoption by our enterprise customers. Few of them had experience with Temporal, which slowed down security and compliance reviews and was a burden for large organizations self-hosting Nango.

How We Evaluated Different Alternatives

We started by listing our requirements:

- Stage appropriate & simple: We have a significant load on this system today but are not at hyperscaler scale. We were looking for a simple solution that could scale with us for at least the next 18 months and deal with at least 10 times more load than we see today.

- Minimal dependencies: Reuse our existing technologies (Postgres, Redis, Elasticsearch) as much as possible.

- Flexibility & control over how schedules run: This infrastructure is at the core of how our product works. We wanted to stay flexible if our needs changed in the future.

We mostly looked at three options:

- Using a system like Hatchet, which builds queues on Postgres.

  Their blog post on multi-tenant queues is fantastic and was a great inspiration for us. Unfortunately, Hatchet has many features we don’t need today and comes with additional dependencies besides Postgres (especially RabbitMQ), which we wanted to avoid.

- Postgres queuing libraries like PGBoss.

  Some key features we required were missing: No heartbeats, no sub-queues, only cron-style scheduling with no advanced control over how schedules are executed (e.g., only run if the last instance of the job has finished running). But it was a nice reference implementation for the core queueing logic.

- Building a queue and scheduler ourselves on Postgres.

  We were hesitant at first. The complexity of concurrent access didn’t seem like something we should build ourselves at this stage. But it turns out Postgres makes it very easy. Ultimately, we decided to at least explore a proof of concept of this option.

Building Our Queue on Postgres

Creating our own queue and scheduler on Postgres was easier and faster than expected.

Our queue is a simple table in Postgres:

- Each entry is a task that needs to run.

- A column identifies the state of the task (waiting, running, finished).

- A "last state changed" timestamp column lets us identify stale tasks.

- A column assigns the task to a queue.

It looks something like this:
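As a minimal sketch, assuming hypothetical table and column names (`tasks`, `queue_name`, `state`, `last_state_changed_at`, `payload`, `created_at`), such a table could be declared as follows. The DDL is kept in a TypeScript constant so it can be passed to any Postgres client. It illustrates the four properties listed above and is not Nango's actual schema.

```typescript
// Hypothetical schema for illustration: the table, column, and type names
// are assumptions, not taken from Nango's codebase.
const CREATE_TASKS_TABLE_SQL = `
CREATE TYPE task_state AS ENUM ('waiting', 'running', 'finished');

CREATE TABLE tasks (
    id                    BIGSERIAL   PRIMARY KEY,
    queue_name            TEXT        NOT NULL,                   -- which queue the task belongs to
    state                 task_state  NOT NULL DEFAULT 'waiting', -- waiting, running, or finished
    last_state_changed_at TIMESTAMPTZ NOT NULL DEFAULT now(),     -- lets us find stale tasks
    payload               JSONB       NOT NULL DEFAULT '{}',      -- arguments for the task
    created_at            TIMESTAMPTZ NOT NULL DEFAULT now()
);

-- Partial index so that looking up waiting tasks in a queue stays cheap.
CREATE INDEX tasks_waiting_idx ON tasks (queue_name, created_at) WHERE state = 'waiting';
`;

// The same row shape as seen from application code.
type TaskState = 'waiting' | 'running' | 'finished';

interface TaskRow {
    id: string; // BIGINT values are often surfaced as strings by JavaScript drivers
    queue_name: string;
    state: TaskState;
    last_state_changed_at: Date;
    payload: Record<string, unknown>;
    created_at: Date;
}
```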

New tasks are queued with a simple insert.
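A sketch of what such an insert could look like is shown below. The helper `buildEnqueueQuery`, the `QueryConfig` shape, and the table and column names are illustrative assumptions. The helper only builds the parameterized statement, so it can be executed with whichever Postgres client is in use.

```typescript
// A parameterized statement: SQL text plus the values bound to $1, $2, ...
interface QueryConfig {
    text: string;
    values: unknown[];
}

// Hypothetical helper: builds the INSERT that puts a new task into a queue.
// Table and column names are assumptions made for this example.
function buildEnqueueQuery(queueName: string, payload: Record<string, unknown>): QueryConfig {
    return {
        text: `INSERT INTO tasks (queue_name, state, payload)
VALUES ($1, 'waiting', $2::jsonb)
RETURNING id`,
        // The payload is serialized explicitly so the JSONB cast receives valid JSON text.
        values: [queueName, JSON.stringify(payload)]
    };
}
```

Every new task starts in the waiting state, so a consumer can pick it up on its next poll.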

Consumers use an endless loop to periodically check for new tasks, dequeue, and process them. To avoid race conditions between many consumers, we use the built-in locking mechanism for Postgres SELECT statements:

- SELECT ... FOR UPDATE tells Postgres that we intend to update the rows we select. It locks them to prevent concurrent access until our update transaction completes.

- SKIP LOCKED in the same statement tells Postgres to skip any rows that are already locked and not return them.

The full SQL statement for dequeuing tasks looks something like this:
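The following is a sketch of one common way to write such a statement, not a copy of Nango's query. It assumes a hypothetical `tasks` table with `queue_name`, `state`, `created_at`, and `last_state_changed_at` columns. The `buildDequeueQuery` helper and the `QueryConfig` shape are also assumptions.

```typescript
// A parameterized statement: SQL text plus the values bound to $1, $2, ...
interface QueryConfig {
    text: string;
    values: unknown[];
}

// Hypothetical helper: claims up to `limit` waiting tasks from one queue and
// marks them as running in a single statement.
function buildDequeueQuery(queueName: string, limit: number): QueryConfig {
    return {
        text: `
WITH next_tasks AS (
    SELECT id
    FROM tasks
    WHERE queue_name = $1
      AND state = 'waiting'
    ORDER BY created_at          -- oldest tasks first
    LIMIT $2
    FOR UPDATE SKIP LOCKED       -- lock the rows we take, skip rows locked by other consumers
)
UPDATE tasks
SET state = 'running',
    last_state_changed_at = now()
FROM next_tasks
WHERE tasks.id = next_tasks.id
RETURNING tasks.*`,
        values: [queueName, limit]
    };
}
```

Because the select and the update happen in one statement, two consumers running it at the same time receive disjoint sets of tasks: each one skips the rows the other has already locked.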

This solution is both very simple and flexible. We have full control over the WHERE and ORDER BY clauses and can filter or prioritize tasks based on any metric in our database (e.g., ensure fairness between accounts or different integrations in Nango).
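The polling consumers described earlier can be sketched as a small loop. Everything here is an assumption made for illustration: the `ConsumerOptions` shape, the injected `dequeue`, `handle`, and `finish` callbacks, and the choice to sleep only when the queue is empty.

```typescript
// Hypothetical names throughout; the storage calls are injected so the loop
// itself does not depend on a particular Postgres client.
interface Task {
    id: number;
    payload: unknown;
}

interface ConsumerOptions {
    dequeue: (limit: number) => Promise<Task[]>;               // e.g. runs the SKIP LOCKED query
    handle: (task: Task) => Promise<void>;                     // executes one task
    finish: (task: Task, succeeded: boolean) => Promise<void>; // records the outcome
    batchSize: number;
    pollIntervalMs: number;
    shouldStop: () => boolean;                                 // lets the caller end the loop
}

function sleep(ms: number): Promise<void> {
    return new Promise<void>((resolve) => setTimeout(() => resolve(), ms));
}

async function runConsumer(options: ConsumerOptions): Promise<void> {
    while (!options.shouldStop()) {
        const tasks = await options.dequeue(options.batchSize);
        if (tasks.length === 0) {
            // Nothing to do: wait before polling again.
            await sleep(options.pollIntervalMs);
            continue;
        }
        for (const task of tasks) {
            let succeeded = true;
            try {
                await options.handle(task);
            } catch (_error) {
                // A failing task must not take the whole consumer down.
                succeeded = false;
            }
            await options.finish(task, succeeded);
        }
    }
}
```

Injecting `shouldStop` keeps the loop testable and gives a hook for graceful shutdown.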

Nango is fully open source. If you want to take a closer look, you can find the full implementation of our queue (~300 lines of TypeScript) here.

Implementing a Scheduler in Postgres

The scheduler builds on our queue and adds the ability to schedule tasks to run at a specific time.

We extended our queue table with a column for the scheduled run time of the task:
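A sketch of that change, assuming a hypothetical `starts_after` column on a `tasks` table, could look like this. The `buildScheduleQuery` helper and the `QueryConfig` shape are likewise assumptions. The helper differs from an ordinary enqueue only in setting the run time.

```typescript
// Hypothetical migration: the column name is an assumption for this example.
// Defaulting to now() keeps tasks that are enqueued without a schedule runnable immediately.
const ADD_STARTS_AFTER_SQL = `
ALTER TABLE tasks
    ADD COLUMN starts_after TIMESTAMPTZ NOT NULL DEFAULT now();
`;

// A parameterized statement: SQL text plus the values bound to $1, $2, ...
interface QueryConfig {
    text: string;
    values: unknown[];
}

// Hypothetical helper: the same INSERT as for a regular task, plus the run time.
function buildScheduleQuery(
    queueName: string,
    payload: Record<string, unknown>,
    startsAfter: Date
): QueryConfig {
    return {
        text: `INSERT INTO tasks (queue_name, state, payload, starts_after)
VALUES ($1, 'waiting', $2::jsonb, $3::timestamptz)
RETURNING id`,
        // ISO 8601 in UTC avoids any ambiguity about the time zone.
        values: [queueName, JSON.stringify(payload), startsAfter.toISOString()]
    };
}
```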

Scheduling a task to run works the same as enqueuing any other task.

Dequeuing gets slightly more complex. At Nango, we want to make sure that only one task from a given series is running at any time:

- We filter for the tasks that should be run now.

- We also check if their previous run (in the same series) has finished.
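The two filters above can be expressed as a plain eligibility check. The sketch below applies it in memory rather than in SQL, purely to make the rule explicit. The `ScheduledTask` shape and the `isRunnable` function are hypothetical, and "previous run" is interpreted here as any earlier task of the same series.

```typescript
type TaskState = 'waiting' | 'running' | 'finished';

// Hypothetical in-memory view of a scheduled task.
interface ScheduledTask {
    id: number;
    seriesId: string | null; // null for one-off tasks that belong to no series
    state: TaskState;
    startsAfter: Date;
}

function isRunnable(task: ScheduledTask, allTasks: ScheduledTask[], now: Date): boolean {
    if (task.state !== 'waiting') {
        return false;
    }
    // Filter 1: the task is due.
    if (task.startsAfter.getTime() > now.getTime()) {
        return false;
    }
    if (task.seriesId === null) {
        return true;
    }
    // Filter 2: every earlier task of the same series has finished.
    return !allTasks.some(
        (other) =>
            other.seriesId === task.seriesId &&
            other.id !== task.id &&
            other.startsAfter.getTime() < task.startsAfter.getTime() &&
            other.state !== 'finished'
    );
}
```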

You can find the full scheduler implementation here on GitHub.

Performance Bottleneck

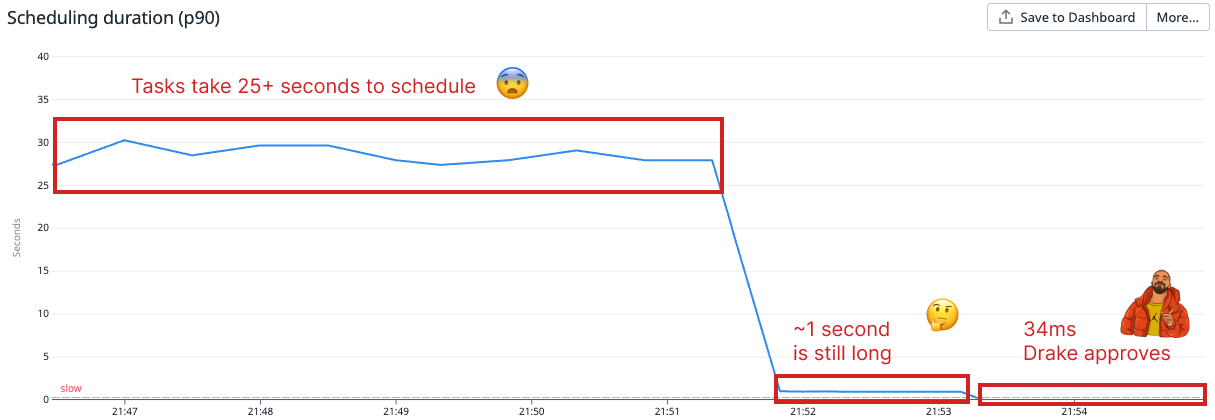

With the scheduler, we ran into an unexpected performance issue.

Some tasks in Nango run as part of a series (group of tasks). Initially, we computed the last run state of the task series as part of the dequeuing query.

But even with a moderate number of tasks (a few million), this query became very slow. Task rows stayed locked for a long time, which ground the whole dequeuing process to a halt and caused some dequeuing queries to time out.

Luckily, we had metrics set up for this and caught it quickly.

The solution was simple: we now store a link to the previous run of the task series with each queued task. Dequeuing is fast again, and we have easily scaled to many millions of tasks.
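One way such a link could be modeled is sketched below. It assumes a hypothetical `previous_task_id` column next to the `queue_name`, `state`, and `starts_after` columns used for illustration. The names and the query shape are assumptions, not Nango's implementation. The point is that checking the previous run becomes a primary-key lookup instead of a scan over the whole series.

```typescript
// Hypothetical migration: each task points at the previous task of its series.
const ADD_PREVIOUS_TASK_LINK_SQL = `
ALTER TABLE tasks
    ADD COLUMN previous_task_id BIGINT REFERENCES tasks (id);
`;

// Hypothetical dequeue statement using the link.
// $1 is the queue name, $2 the maximum number of tasks to claim.
const DEQUEUE_WITH_LINK_SQL = `
WITH next_tasks AS (
    SELECT t.id
    FROM tasks t
    WHERE t.queue_name = $1
      AND t.state = 'waiting'
      AND t.starts_after <= now()
      AND (
          t.previous_task_id IS NULL        -- first task of a series, or a one-off task
          OR EXISTS (
              SELECT 1
              FROM tasks prev
              WHERE prev.id = t.previous_task_id
                AND prev.state = 'finished' -- previous run is done
          )
      )
    ORDER BY t.starts_after
    LIMIT $2
    FOR UPDATE OF t SKIP LOCKED
)
UPDATE tasks
SET state = 'running',
    last_state_changed_at = now()
FROM next_tasks
WHERE tasks.id = next_tasks.id
RETURNING tasks.*
`;
```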

Rollout: How We Migrated Millions of Tasks with No Downtime

Our customers rely on Nango to power integrations in their products. If we are down, part of our customers’ products stops working, which is a terrible experience.

With this in mind, we created a migration strategy without downtime:

- Dry-run the new queue and scheduler in parallel with the old Temporal setup.

- Switch individual accounts to the new implementation with feature flags.

- Monitor performance and task completions to make sure everything works.

- Enable the new system for more accounts, or switch back to the old system if we identify issues.

- Once everybody is switched over, remove the old implementation.

During the dry-run, interactions were enqueued and dequeued on the new and old systems in parallel, but only the old system actually executed the dequeued interactions. We compared metrics between the two to verify that the new queuing and scheduling worked as expected before processing any data for production accounts.
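The per-account switch can be pictured as a small routing table. The sketch below is an illustration under assumptions: the `RolloutMode` values, the `modeForAccount` lookup, and the `planFor` function are hypothetical names, and real feature flags would come from a flag service rather than an object literal.

```typescript
// Hypothetical rollout stages for one account.
type RolloutMode = 'legacy' | 'dry_run' | 'new';
type System = 'legacy' | 'new';

interface RoutingPlan {
    enqueueOn: System[]; // systems that receive the task
    executeOn: System;   // the single system allowed to actually run it
}

// Accounts without an explicit flag stay on the old system.
function modeForAccount(flags: Record<string, RolloutMode>, accountId: string): RolloutMode {
    const mode = flags[accountId];
    return mode === undefined ? 'legacy' : mode;
}

function planFor(mode: RolloutMode): RoutingPlan {
    switch (mode) {
        case 'legacy':
            return { enqueueOn: ['legacy'], executeOn: 'legacy' };
        case 'dry_run':
            // Both systems see the task, but only the old one executes it.
            return { enqueueOn: ['legacy', 'new'], executeOn: 'legacy' };
        case 'new':
            return { enqueueOn: ['new'], executeOn: 'new' };
    }
}
```

Switching an account back to the old system is then a matter of changing its flag, which is what makes the gradual rollout reversible.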

Once we were confident that everything worked as expected, we first enabled data processing on the new system for internal test accounts. This was followed by some user accounts with only test integrations, then some with production traffic, and eventually all accounts.

We also segmented on the different interaction types to further reduce the scope where problems could emerge with production workloads.

Luckily, everything went well, and within about a week all accounts were fully migrated to the new system.

We were pleasantly surprised by how well this migration strategy worked and how little overhead it introduced. We have since used it for other drop-in replacements in our stack.

Conclusion

Thanks to the powerful locking and concurrency primitives of Postgres, we could implement a queue and scheduler without significantly increasing the complexity of the codebase on our side.

We also removed our biggest and most complex external dependency and gained more control and visibility over a key part of our product.

Once again, we have been impressed by the quality and comprehensiveness of Postgres. We now use it for three very different workloads at Nango, and it has excelled at all three of them:

- Main, relational application database (config, users, etc.)

- Object store for the continuous data syncs of our customers’ integrations (many millions of JSON records)

- Queue and scheduler for orchestrating interactions with third-party APIs (many millions of tasks per month)

If you like working on deep, technical challenges at an open-source developer tool, check out our Careers page: We are hiring remotely!

Last updated on: March 11, 2025